“PACE”: Precise Asynchronous Collaboration in Extended Reality

Project Objective

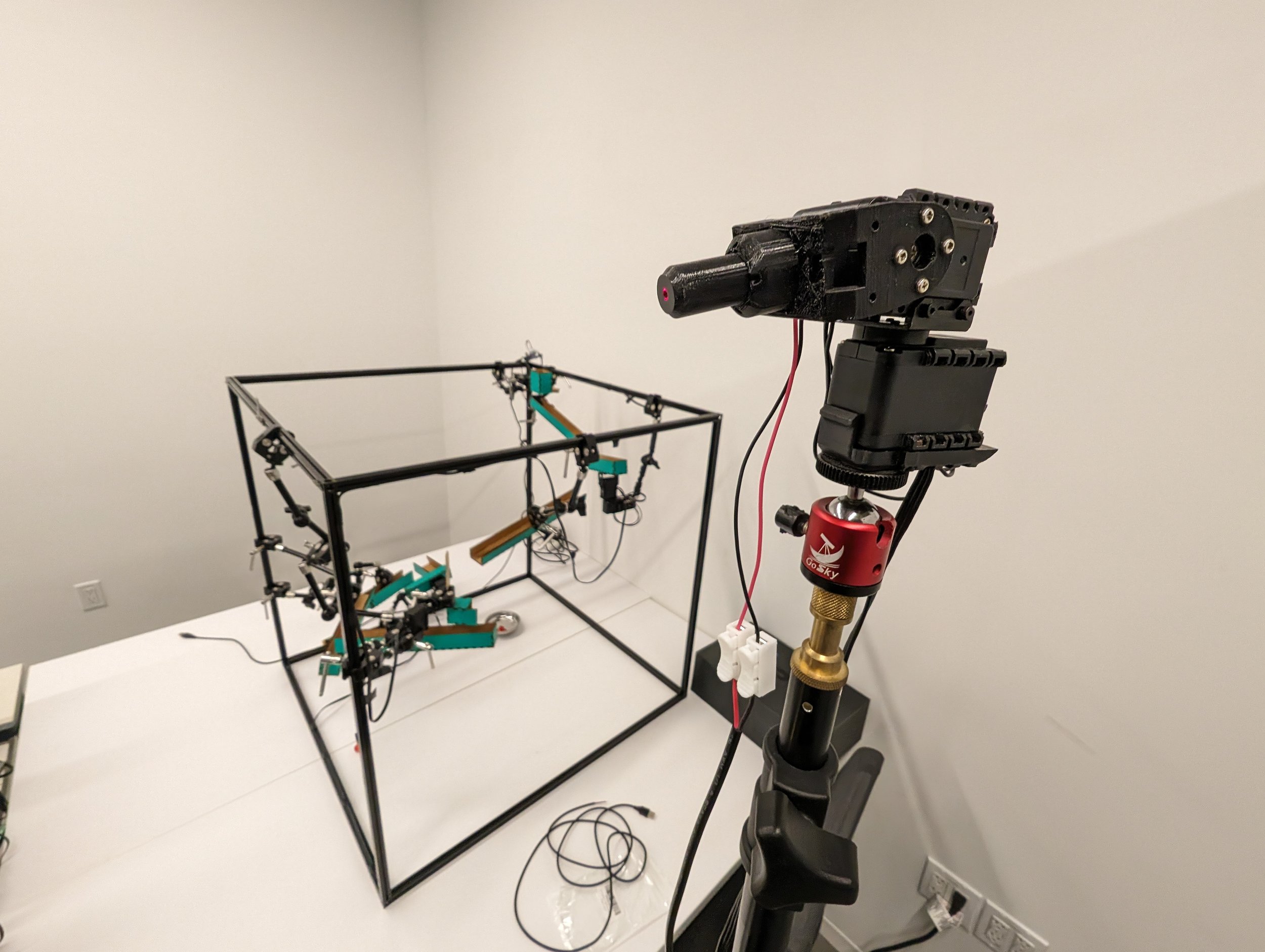

The Precise Asynchronous Collaboration in Extended Reality ("PACE") independent research project lets users point at virtual planes in a real-world environment while a real-world two-degree-of-freedom (2-DOF) motor system equipped with a laser diode tracks their target(s). The real-world laser follows the user's virtual pointer so that the motion can be played back asynchronously to other users. The project will enable a user wearing a headset to record their movements so that other users can learn from their actions later.
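Aiming a 2-DOF laser at a target reduces to two angles: a pan angle about the vertical axis and a tilt angle above the horizontal plane. The sketch below assumes the target is already expressed in the turret's own frame (origin at the pan axis, x forward, y left, z up, following the ROS REP 103 convention) and that the laser sits where the pan and tilt axes meet; `aim_angles` is a hypothetical helper, not code from the PACE project.

```python
import math

def aim_angles(x: float, y: float, z: float) -> tuple[float, float]:
    """Return (pan, tilt) in radians that point a 2-DOF turret at (x, y, z).

    Assumption: (x, y, z) is in the turret frame (x forward, y left, z up)
    and the laser is mounted where the pan and tilt axes intersect.
    """
    pan = math.atan2(y, x)                  # rotation about the vertical (z) axis
    tilt = math.atan2(z, math.hypot(x, y))  # elevation above the horizontal plane
    return pan, tilt

pan, tilt = aim_angles(2.0, 2.0, 0.0)
print(round(math.degrees(pan), 1), round(math.degrees(tilt), 1))  # 45.0 0.0
```

A real mount would also need to account for the offset between the laser and the rotation axes, which this sketch ignores.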

The long-term objective of this project is to give a subject-matter expert (SME) a way to record their movements using a laser “turret” built with ROS2 on Linux and a 2-DOF Dynamixel motor system. Later, another user can replay the SME's actions and audio and see a laser pointer on the real-world objects while the audio explains the task.
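Replaying an SME's session means recovering the turret pose at any playback time from samples that were recorded at irregular intervals. The sketch below assumes a hypothetical recording format, a time-sorted list of `PointerSample(t, pan, tilt)` entries, and linearly interpolates between neighbouring samples; it illustrates one possible approach rather than PACE's actual storage or playback code.

```python
from bisect import bisect_right
from dataclasses import dataclass

@dataclass(frozen=True)
class PointerSample:
    """One recorded turret pose (hypothetical recording format)."""
    t: float     # seconds since the start of the recording
    pan: float   # radians
    tilt: float  # radians

def sample_at(recording: list[PointerSample], t: float) -> tuple[float, float]:
    """Linearly interpolate (pan, tilt) at playback time t.

    `recording` must be non-empty and sorted by time. Times outside the
    recording are clamped to the first or last sample.
    """
    if t <= recording[0].t:
        return recording[0].pan, recording[0].tilt
    if t >= recording[-1].t:
        return recording[-1].pan, recording[-1].tilt
    times = [s.t for s in recording]
    i = bisect_right(times, t)   # guarantees times[i-1] <= t < times[i]
    a, b = recording[i - 1], recording[i]
    w = (t - a.t) / (b.t - a.t)  # fraction of the way from a to b
    return a.pan + w * (b.pan - a.pan), a.tilt + w * (b.tilt - a.tilt)

rec = [PointerSample(0.0, 0.0, 0.0), PointerSample(1.0, 0.5, 0.2)]
print(sample_at(rec, 0.5))  # (0.25, 0.1)
```

Driving `t` from the same clock as the recorded audio would keep the laser in step with the narration. If the pan range can cross ±π, the angles should be unwrapped before interpolating so the turret does not swing the long way round.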

Wireless ROS2 Motor Control using Unity and Meta Quest 3 Passthrough

PACE allows a 2-DOF laser system to track a user as they move around a workspace. The system will be extended to track raycast collisions with surfaces in the spatial map of the user's real-world environment.
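Tracking a raycast collision means finding where the controller's pointing ray meets a surface. As a simplification, the sketch below treats one surface from the spatial map as an infinite plane given by a point and a normal and applies the standard ray-plane intersection; `ray_plane_hit` is a hypothetical helper, and a real spatial map would be a mesh queried by the engine's own raycast.

```python
from typing import Optional

Vec3 = tuple[float, float, float]

def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def ray_plane_hit(origin: Vec3, direction: Vec3,
                  plane_point: Vec3, plane_normal: Vec3,
                  eps: float = 1e-9) -> Optional[Vec3]:
    """Return where origin + s * direction (s >= 0) meets the plane, or None."""
    denom = dot(direction, plane_normal)
    if abs(denom) < eps:  # ray is parallel to the plane
        return None
    diff = (plane_point[0] - origin[0],
            plane_point[1] - origin[1],
            plane_point[2] - origin[2])
    s = dot(diff, plane_normal) / denom
    if s < 0:             # plane is behind the ray origin
        return None
    return (origin[0] + s * direction[0],
            origin[1] + s * direction[1],
            origin[2] + s * direction[2])

# Controller 1.5 m above a floor plane (z = 0), pointing forward and down.
print(ray_plane_hit((0.0, 0.0, 1.5), (1.0, 0.0, -1.0), (0.0, 0.0, 0.0), (0.0, 0.0, 1.0)))
# (1.5, 0.0, 0.0)
```

The hit point, once expressed in the turret's frame, is what the laser would be aimed at.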

Here, you can see an early prototype of the project. Initially, I built a Unity VR scene that let me test the ROS2 server communication and the turret's movements relative to emulated player and controller game objects.
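Positions coming from a Unity scene have to change coordinate convention before a ROS2 node can aim at them: Unity is left-handed with x right, y up and z forward, while ROS (REP 103) is right-handed with x forward, y left and z up. The sketch below shows that mapping plus a turret-relative offset; it assumes the turret base is level and faces Unity's +z direction, and both function names are hypothetical.

```python
Vec3 = tuple[float, float, float]

def unity_to_ros(v: Vec3) -> Vec3:
    """Map Unity axes (x right, y up, z forward) to ROS axes (x forward, y left, z up)."""
    x, y, z = v
    return (z, -x, y)

def target_in_turret_frame(target_unity: Vec3, turret_unity: Vec3) -> Vec3:
    """Express a Unity world position relative to the turret, in ROS axes.

    Assumption: the turret base is level and faces Unity's +z direction,
    so no extra rotation is needed beyond the axis remapping.
    """
    offset = (target_unity[0] - turret_unity[0],
              target_unity[1] - turret_unity[1],
              target_unity[2] - turret_unity[2])
    return unity_to_ros(offset)

# An emulated controller 1 m to the right of and 2 m in front of the turret.
print(target_in_turret_frame((1.0, 1.2, 2.0), (0.0, 1.2, 0.0)))  # (2.0, -1.0, 0.0)
```

"Right" in Unity becomes negative y in ROS, which is why the x component is negated.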

Another video, filmed from a different angle, shows the early tests of the 2-DOF motor system and the ROS2 wireless communication.